Jean Bresson [personal page]

GitHub | HAL | Google Scholar | LinkedIn | Academia.edu

Projects

Some other / past projects…

| OpenMusic (2003-2019) |

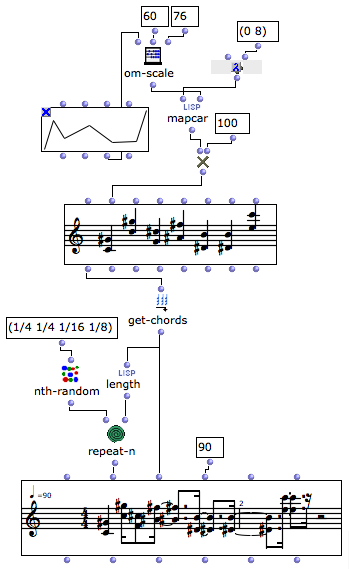

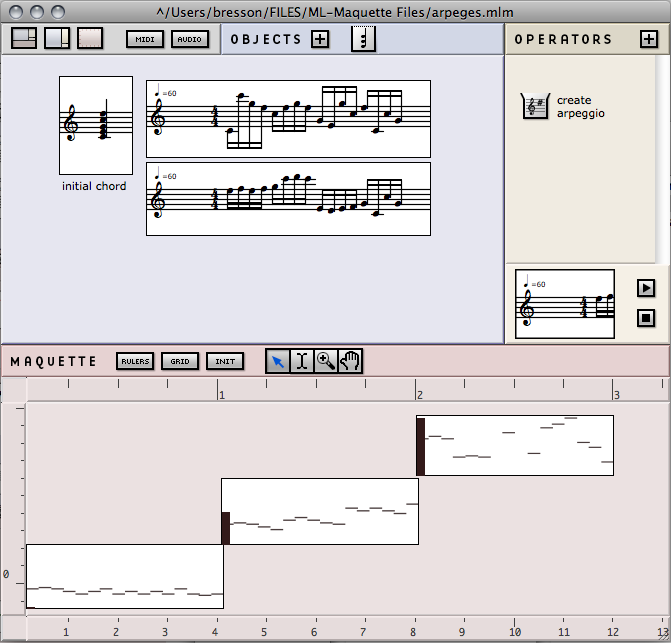

OpenMusic was the main project I worked on while I was at IRCAM. This visual programming language based on Lisp was initially developed by Carlos Agon and Gérard Assayag while I was still in the playgrounds. I created and released the first multi-platform version of the environment (OM5 for Mac/Windows) in 2005. OM6 followed a few years later, in 2008, and it is still the version used today! In 2012 I started collaborating with Anders Vinjar, with the support of the Norwegian centers BEK and Notam, on a Linux version that is now also available. OpenMusic has become a reference environment for computer-assisted music composition, used and taught by many composers in institutions worldwide. While continuing to maintain and support OpenMusic, in recent years I started a new implementation of the environment, both as a context for and an outcome of research projects, and progressively focused on what has now become OM#.

| Cosmologies of the Concert Grand Piano (2019-2020) |

I was the technical project coordinator of Aaron Einbond's Vertigo S+T+ARTS residency, Cosmologies of the Concert Grand Piano. In this project, we brought together the results of recent research on granular/concatenative synthesis, spatialization (and a little bit of machine learning) in order to implement a model of spatial synthesis based on 3D radiation patterns of acoustic instruments, running on Max and OM#. Cosmologies premiered at the Ircam Live concert at the Centre Pompidou, Paris, on 5/03/2020. → S+T+ARTS project page → Aaron's Blog post → Aaron's Interview → Aaron's Ircam talk

| PACO: Artificial Intelligence and Computer-Assisted Composition (2018-2019) |

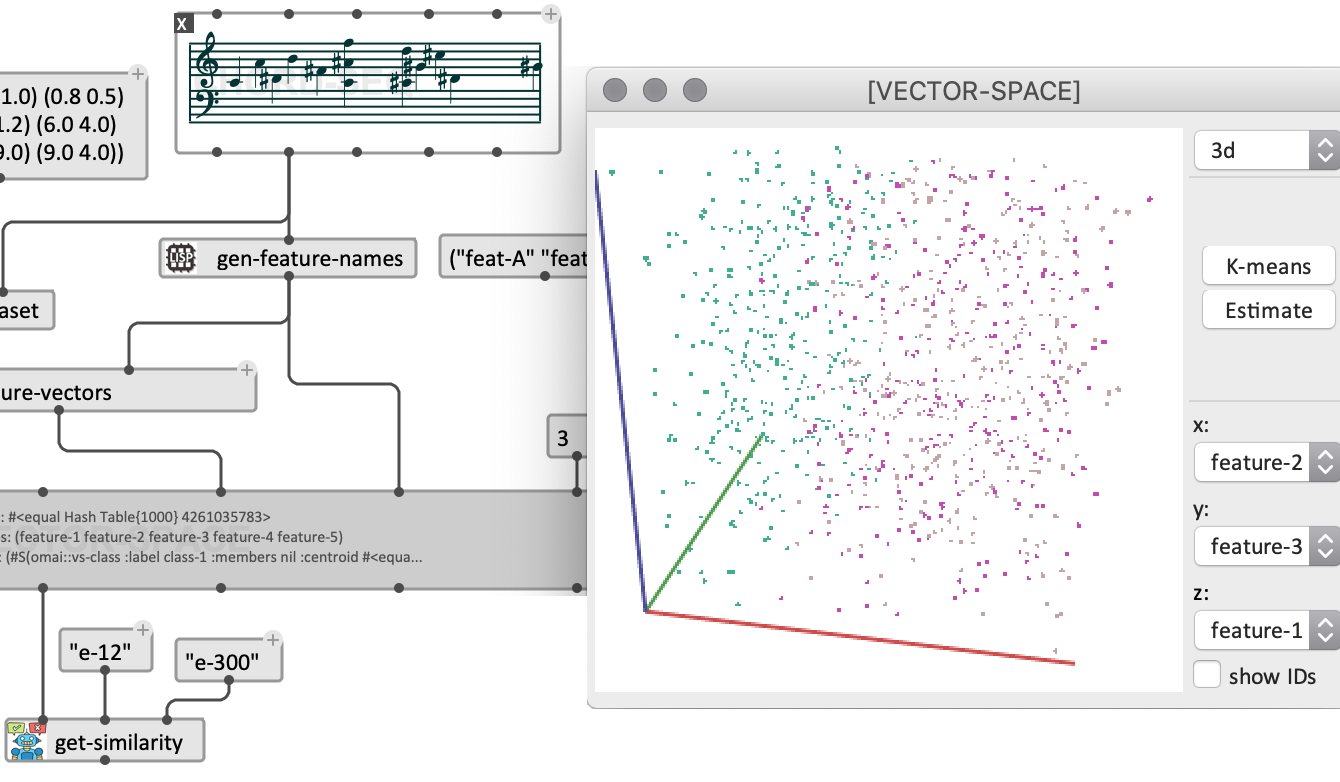

PACO was an exploratory project on artificial intelligence (just like everybody else!), machine learning and computer-assisted composition, supported by start-up funding from the CNRS "PEPS" program. Our objective was to explore how composers could use AI and machine learning techniques within creative processes (not as a substitute for, or a complement to, creativity) to generate or transform musical data, or to solve musical problems involving abstract characteristics of musical structures that are sometimes difficult to quantify and to handle explicitly with classical programming techniques. Composers Anders Vinjar and Alireza Farhang, as well as Paul Best (software engineering intern, co-supervised with Diemo Schwarz), were my main collaborators on this project. Anders Vinjar is currently following up on it in the context of a musical research residency at IRCAM (2019-2020). → PACO project page

| Symbolist (2018) |

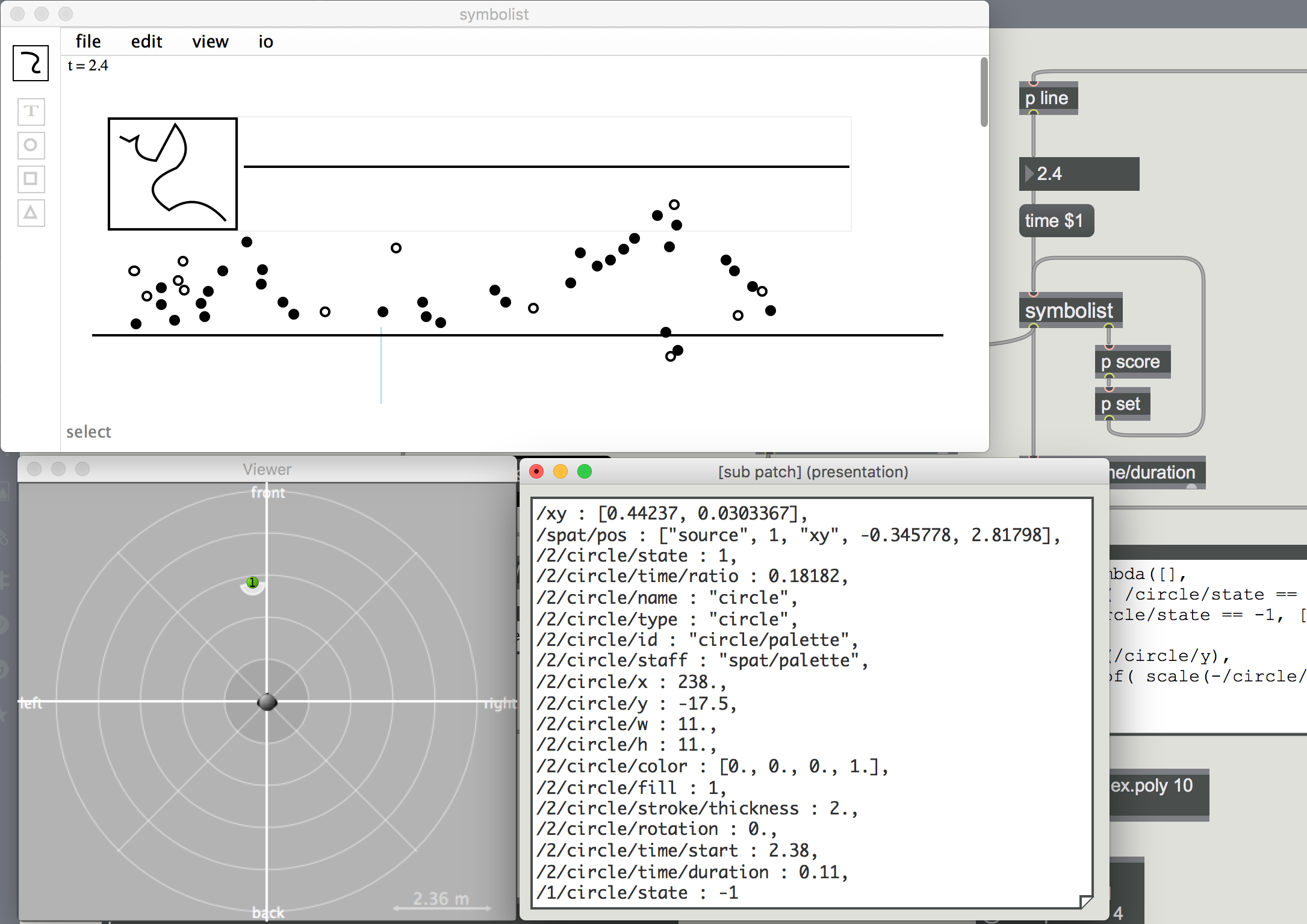

Symbolist is a collaboration with composer Rama Gottfried, started in 2018 during his musical research residency at IRCAM. Our objective was to create a graphic notation environment for music and multimedia, enabling graphical editing, programming, and streaming of multi-rate/multidimensional control data encoded as OSC structures. Vincent Iampietro (Master's student) also worked on the development of the current prototype. Rama is now continuing this project at the Hochschule für Musik in Hamburg.

| o.OM: Experiments with CNMAT's odot library (2016) |

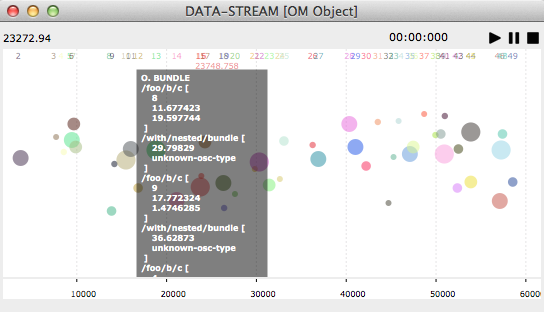

I was at UC Berkeley in 2016 on a Fulbright research scholarship, where I had the chance to collaborate with John MacCallum, Adrian Freed and other CNMAT fellows on applications of the odot library in OM/OM#, bringing end-user programming down to the core of OSC communication. → FARM'16 Paper

| DYCI2: Creative Dynamics of Improvised Interaction (2015-2018) |

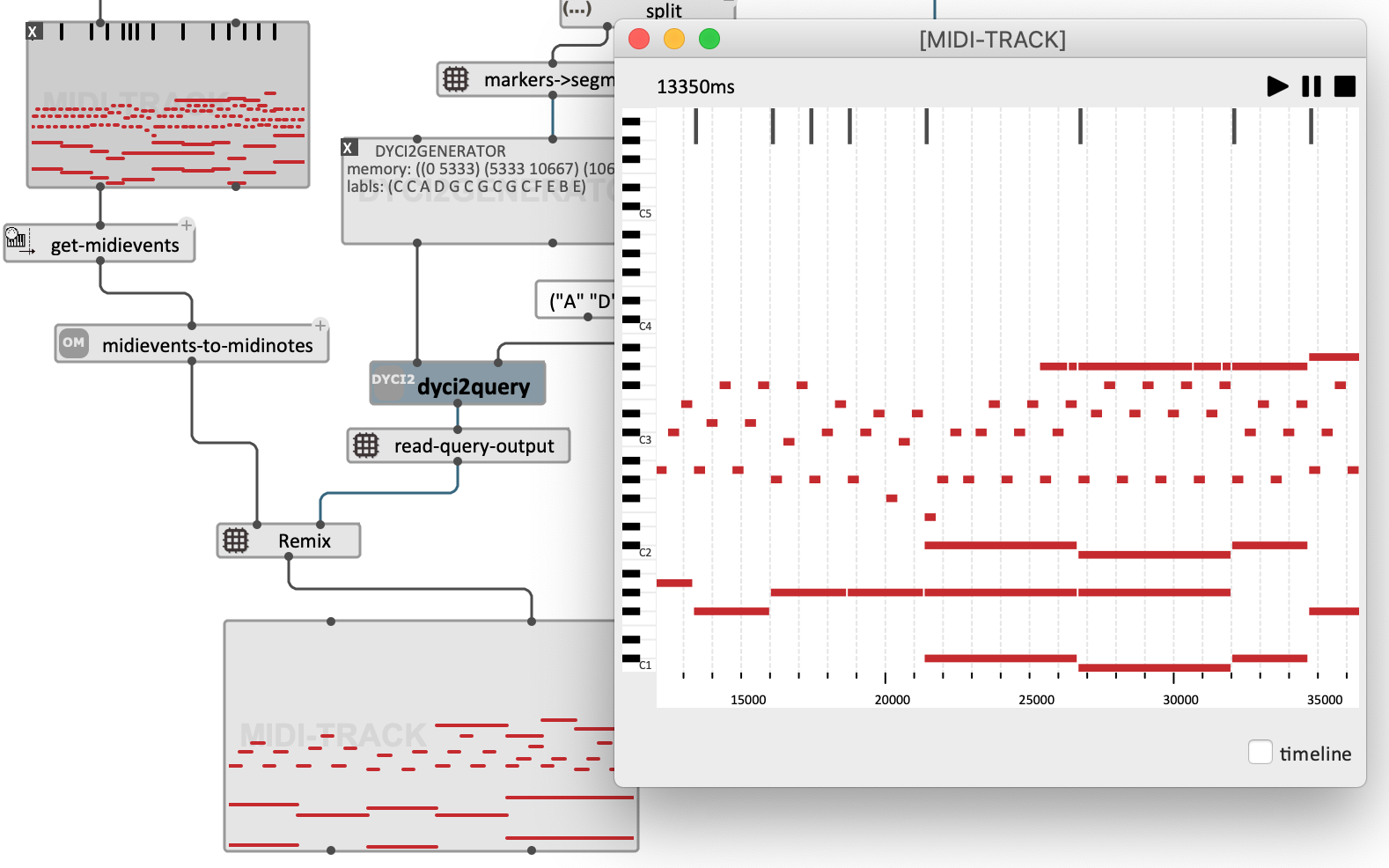

DYCI2 explored the Creative Dynamics of Improvised Interactions between human and artificial agents, featuring an Informed Artificial Listening scheme, a Musical Structure Discovery and Learning scheme, and a generalized Interaction / Knowledge / Decision dynamics scheme. → DYCI2 project pages In line with a tradition of research and environments developed in the Music Representation team at Ircam, the DYCI2 library created by Jérôme Nika allows musicians to interact with improvising agents, adding to its predecessors (such as OMax) the notion of scenario guidance, previously developed in the Improtek project. Together we integrated this library into the OpenMusic/OM# environments, allowing hybrid offline/interactive processing and generation of musical structures. → OM-DYCI2

| EFFICACe: Interactivity in Computer-Assisted Composition Processes (2013-2017) |

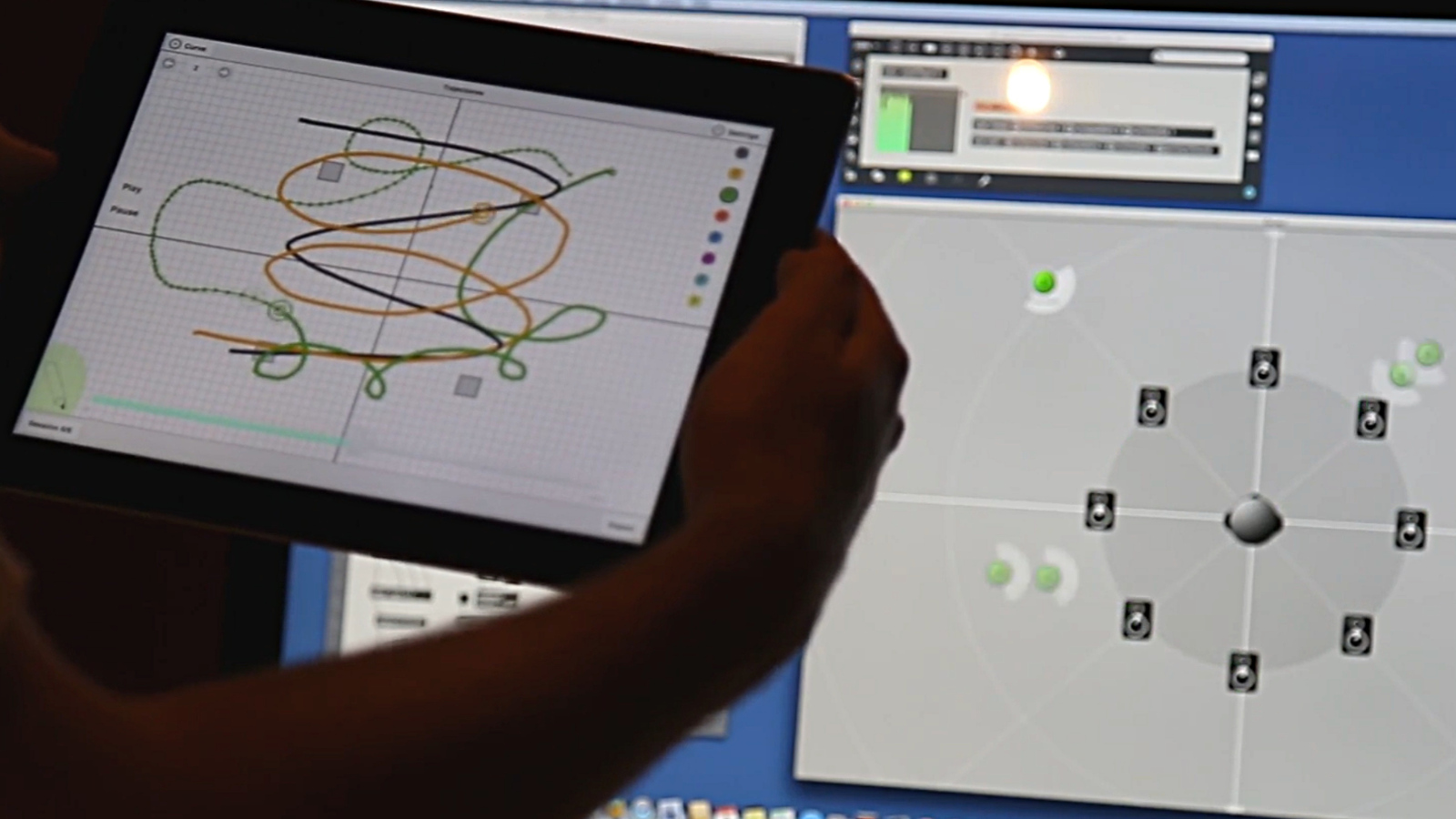

EFFICACe was an exploratory research project that I coordinated at Ircam, funded by the French National Research Agency. The project explored the relations between computation, time and interaction in computer-aided music composition, using OpenMusic and other technologies developed at IRCAM and at CNMAT. Taking computer-aided composition beyond its traditional "offline" paradigm, we tried to integrate compositional processes into structured interactions with their context: the interactions taking place during executions or performances, or at the early compositional stages (in the processes that lead to the creation of musical material). From this perspective, we studied reactive approaches to computer-aided composition, the notion of dynamic time structures in computation and music, rhythmic and symbolic time structures, and new modalities for the interactive control, visualisation and execution of sound synthesis and spatialization processes. I worked on this project together with Dimitri Bouche (PhD student), Jérémie Garcia (post-doctoral researcher), Thibaut Carpentier (CNRS/Ircam), Diemo Schwarz (Ircam) and Florent Jacquemard (Inria/Ircam). EFFICACe research also provided a grounding motivation for the development of interactive visual programming and dynamic scheduling in the OM# environment. → Project pages

| SampleOrchestrator: OM-Orchidée (2010-2012) |

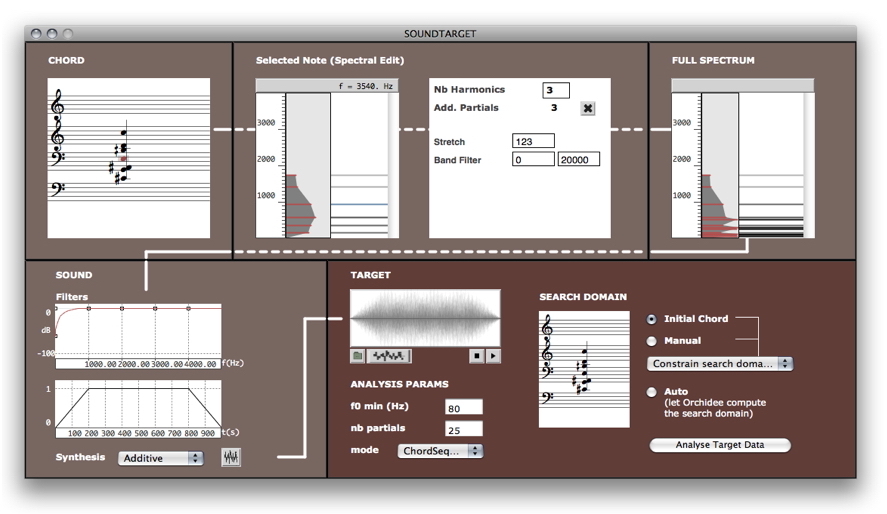

During the SampleOrchestrator project (ANR-06-RIAM-0027) I created (with Grégoire Carpentier) a client for the Orchidée orchestration software in OpenMusic. Orchidée was an orchestral constraint solver implemented as a Matlab server communicating through OSC. The OpenMusic client (OM-Orchidée) consisted of a set of objects for the specification of orchestral constraints, the generation of "sound targets" (a mix of symbolic and audio descriptors), and the decoding/exploration of orchestral solutions proposed by Orchidée.

→ CMJ Paper


| Sound processing / synthesis in OpenMusic |

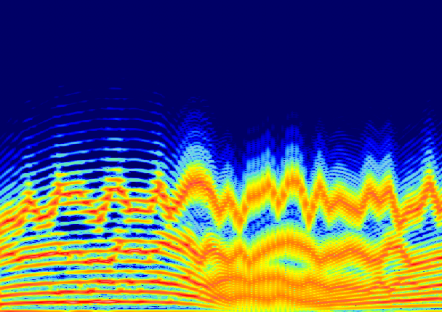

My PhD research (2004-2007) focused on the integration of sound processing and synthesis techniques in compositional processes (and in the OpenMusic environment). I created several external libraries connecting OpenMusic with IRCAM sound processing tools, such as OM-SuperVP, OM-pm2, or OM-Chant (see project below). OMChroma is another project and a long-standing collaboration with composer Marco Stroppa, providing high-level tools and structures for the control of sound synthesis via Csound.

| OM-Chant (2010-2012) |

OM-Chant is a library for the control of Ircam's Chant synthesizer in OpenMusic. Developed in the early 1980s and based on formant wave function (FOF) synthesis, Chant remains a quite unique tool even today for the synthesis of "voiced" sounds (sung vowels) and their continuous control. Marco Stroppa's opera Re Orso (2012) was an important milestone in the development of this project, and the first application of OM-Chant in a production of this scale. → OM-Chant wiki

| Spatialization Control (2008-2019) |

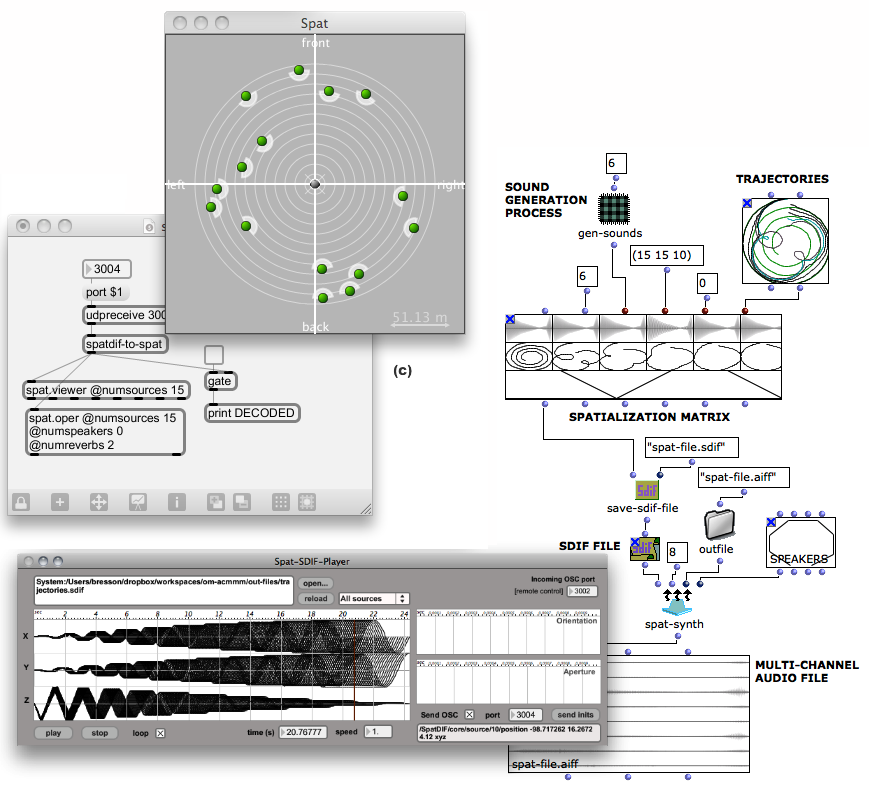

The next step after sound synthesis was to integrate sound spatialization in compositional processes. I created OM-Spat 2, a library for the generation of sound spatialization data and 2D/3D trajectories, connected to an offline renderer made with the Ircam Spat library. OM-Spat used SDIF files for the encoding and storage of trajectories, which could be read by Spat-SDIF-Player, a companion tool created in Max for real-time streaming via OSC. In the same period I collaborated with Marlon Schumacher on the development of OMPrisma. OMPrisma extends OMChroma's paradigm and Csound-based techniques for the high-level control of sound synthesis, making it possible to merge Csound audio synthesis, processing and spatialization instruments in a comprehensive "spatial sound synthesis" framework (see for instance this paper). More recently, OM# developments came along with a powerful new integration of Spat, embedding both audio processing units and GUI controllers.

| Musique Lab 2 (2004-2010) |

Musique Lab 2 was commissioned from Ircam by the French Ministry of Education (and was my first real work contract at Ircam!). The idea was to provide tools that music teachers could use to run music classes with computer-assisted composition technology, allowing them to interactively demonstrate and workshop musical concepts and compositional processes from repertoires ranging from early to contemporary music. More concretely, it consisted of a single-window application developed on top of OpenMusic, including most of its underlying features and built-in "musical knowledge" (plus some additional features such as the notion of tonality!), without the visual programming aspect. The application was released for Mac and Windows (it prompted the first Windows port of OpenMusic, which led to the release of OM5 in 2005) and distributed to music teachers through the Ircam Forum and the Educ'Net networks. Unfortunately, the project was not supported for long after the initial releases.

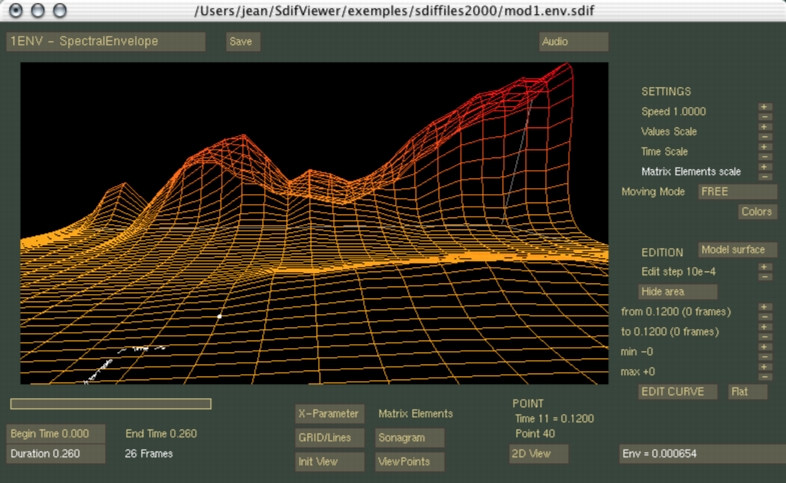

| SDIF-Edit (2003-2004) |

SDIF-Edit was a student internship project that I developed when I started at IRCAM in 2003. It is a 3D visualizer and editor developed in OpenGL for viewing the contents of SDIF files (Sound Description Interchange Format, a file format used by music applications to store and exchange sound analysis data, synthesis parameters, etc.). One of the objectives was to embed such sound description data in compositional processes by integrating this editor into OpenMusic. This idea was taken a few steps further some years later in the context of Savannah Agger's Landschaften residency project at Ircam (2017). → SDIF-Edit on GitHub